- #Beautiful soup github webscraper how to#

- #Beautiful soup github webscraper install#

- #Beautiful soup github webscraper code#

- #Beautiful soup github webscraper full#

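I am getting an error on the 2 lines that are commented in the code: `'NoneType' object has no attribute 'text'`. The error means a lookup such as `find()` matched nothing and returned `None`, so there is no `.text` to read. For the headline lookup, write `headlines = soup.find_all('a', class_='storylink')`. Make sure to use `class_` instead of `class`, because `class` is a reserved keyword in Python.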
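Here is a minimal sketch of both fixes. It runs against a small hypothetical HTML string instead of a live page, and both the markup and the `safe_text` helper are illustrative assumptions. It uses `find_all()` with `class_` and checks for `None` before reading `.text`:

```python
from bs4 import BeautifulSoup

# Hypothetical sample markup standing in for the page being scraped.
HTML = """
<table>
  <tr><td><a class="storylink" href="https://example.com/a">First story</a></td></tr>
  <tr><td><a class="storylink" href="https://example.com/b">Second story</a></td></tr>
  <tr><td><span class="score">42 points</span></td></tr>
</table>
"""

soup = BeautifulSoup(HTML, "html.parser")

# `class` is a reserved keyword in Python, so Beautiful Soup accepts `class_` instead.
headlines = soup.find_all("a", class_="storylink")
titles = [a.text for a in headlines]


def safe_text(tag):
    """Hypothetical helper: return the tag's text, or None when find() matched nothing.

    Calling .text directly on a None result is what raises
    AttributeError: 'NoneType' object has no attribute 'text'.
    """
    return tag.get_text(strip=True) if tag is not None else None


score = safe_text(soup.find("span", class_="score"))
missing = safe_text(soup.find("span", class_="age"))  # no such element in HTML

print(titles)   # ['First story', 'Second story']
print(score)    # 42 points
print(missing)  # None
```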

After this, we can extract any data we need from the `soup` variable. That is why I decided to scrape the web to build my own dataset. The GitHub repository abhishekvispute/WebScraper is one example of scraping multiple web pages with Python.
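The following is a minimal sketch of pulling records out of a `soup` object and saving them as a small dataset. The markup, the field names and the choice of CSV output are assumptions for illustration. They are not taken from the WebScraper repository:

```python
import csv
import io

from bs4 import BeautifulSoup

# Hypothetical markup; in practice this string would come from an HTTP response.
raw_html = """
<div class="repo-list">
  <h3><a href="/example-user/repo-one">repo-one</a></h3>
  <h3><a href="/example-user/repo-two">repo-two</a></h3>
  <h3>Archived (no link)</h3>
</div>
"""

soup = BeautifulSoup(raw_html, "html.parser")

records = []
for heading in soup.find_all("h3"):
    link = heading.find("a")
    if link is None:  # skip headings that contain no link instead of crashing
        continue
    records.append({"name": link.get_text(strip=True), "url": link.get("href")})

# Write the records as CSV into an in-memory buffer (an open file works the same way).
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=["name", "url"])
writer.writeheader()
writer.writerows(records)
csv_text = buffer.getvalue()
```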

#Beautiful soup github webscraper how to#

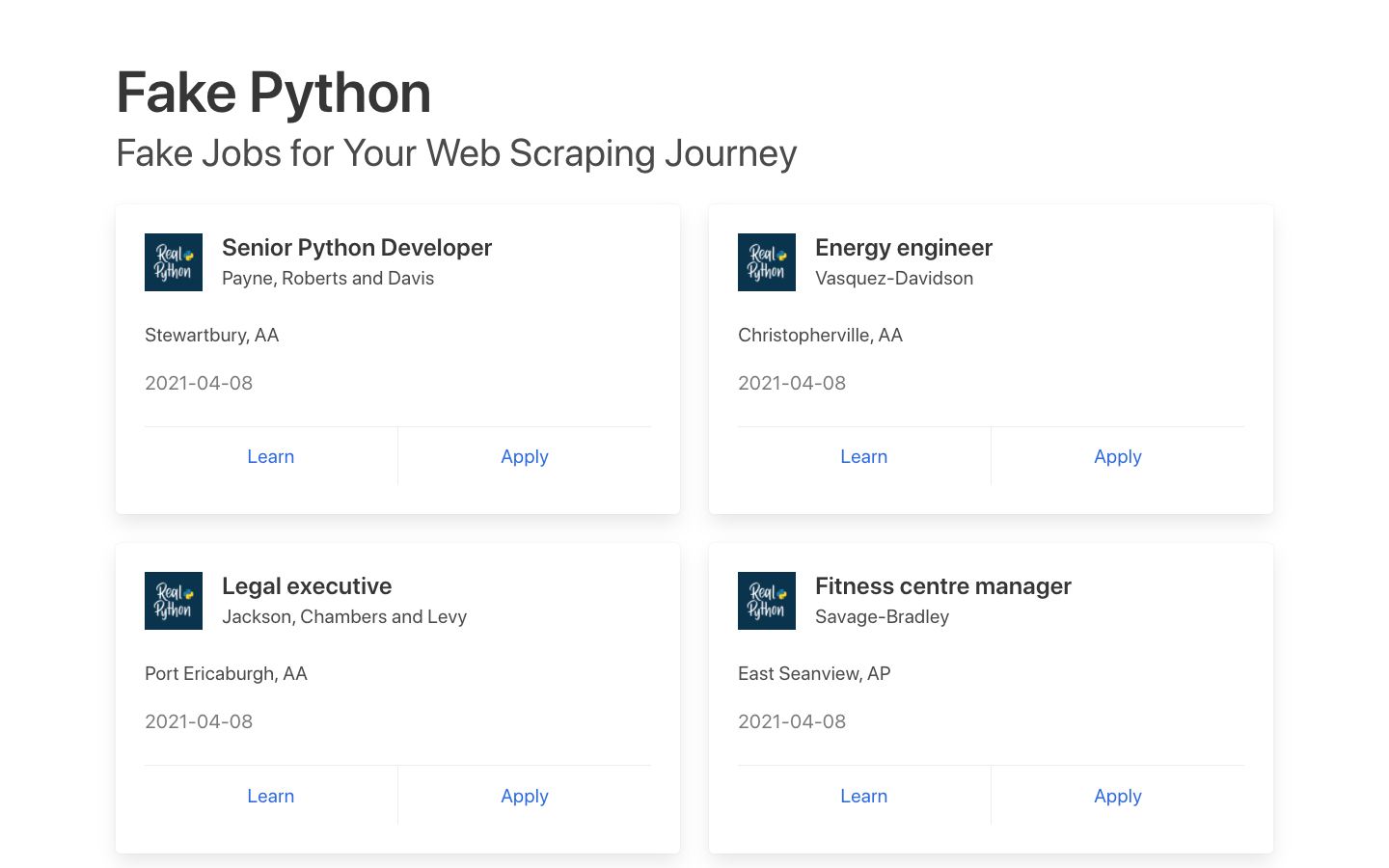

You can also use lxml, but I find html.parser simpler. This article focuses on using Beautiful Soup to extract information from the Poetry Foundation website, scraping multiple web pages with Python using BeautifulSoup and requests.
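Below is a sketch of the multi-page loop with requests and BeautifulSoup. `BASE_URL`, its `?page=` pattern and the `<h2 class="title">` markup are hypothetical placeholders. They are not the Poetry Foundation's real structure, so inspect the target site's HTML before adapting this:

```python
import requests
from bs4 import BeautifulSoup

# Hypothetical paginated listing; replace with the real site's URL pattern.
BASE_URL = "https://example.com/poems?page={page}"


def parse_titles(html):
    """Extract the text of every <h2 class="title"> (hypothetical markup) on one page."""
    soup = BeautifulSoup(html, "html.parser")  # "lxml" also works if lxml is installed
    return [h.get_text(strip=True) for h in soup.find_all("h2", class_="title")]


def scrape_pages(first, last, session=None):
    """Fetch pages first..last (inclusive) and collect the titles from all of them."""
    session = session or requests.Session()  # reuse one connection pool across pages
    titles = []
    for page in range(first, last + 1):
        response = session.get(BASE_URL.format(page=page), timeout=10)
        response.raise_for_status()  # stop on 4xx/5xx instead of parsing an error page
        titles.extend(parse_titles(response.text))
    return titles
```

Keeping the parsing in its own function means it can be checked against saved HTML without hitting the network.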

#Beautiful soup github webscraper install#

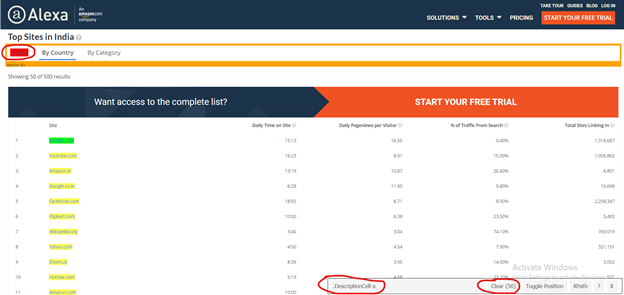

We can install Beautiful Soup from the command line with pip (`pip install beautifulsoup4`). In this lab, we will focus on using Beautiful Soup to parse and scrape web pages built with HTML and CSS. Beautiful Soup extracts information from markup (HTML, XML, etc.) that we can then work with in a Python programming environment.
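Because these pages are built with HTML and CSS, Beautiful Soup can locate elements with the same CSS selectors used to style them. This minimal sketch uses a hypothetical poem page, and its ids and class names are assumptions:

```python
from bs4 import BeautifulSoup

# Hypothetical page fragment styled with CSS classes and ids.
html = """
<article id="poem">
  <h1 class="title">Sample Poem</h1>
  <div class="byline">By <a href="/poets/jane-doe">Jane Doe</a></div>
  <div class="body">
    <p class="line">First line</p>
    <p class="line">Second line</p>
  </div>
</article>
"""

soup = BeautifulSoup(html, "html.parser")

# select_one() returns the first match (or None); select() returns a list of all matches.
title = soup.select_one("#poem h1.title").get_text(strip=True)
author = soup.select_one("div.byline a").get_text(strip=True)
lines = [p.get_text(strip=True) for p in soup.select("div.body > p.line")]
```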

#Beautiful soup github webscraper code#

This is Python code for scraping content from GitHub repositories using the BeautifulSoup library. Beautiful Soup is a Python library for pulling data out of HTML and XML files. It relies on the structure of markup language documents (i.e., HTML and XML): with the help of a parser, it transforms a complex HTML document into a complex tree of Python objects. The line `soup = BeautifulSoup(rawData, 'html.parser')` tells Beautiful Soup to parse the raw HTML with `html.parser`.

Beautiful Soup only sees the HTML that the server returns. When a page builds its content with JavaScript, the output in the notebook is an empty list, because JavaScript hasn't generated the items yet. Using Selenium is an (almost) sure-fire way to generate any dynamic content you need, because the pages are actually visited by a browser (albeit one controlled by Python rather than by you). Scrapy is another open source tool, with 33.9K GitHub stars and 7.95K GitHub forks.

These tutorials are frequently linked to as Stack Overflow solutions and discussed on Reddit. The community that has coalesced around these tutorials and their comments helps anyone, from a beginner hobbyist to an advanced programmer, solve some of the issues they face with web scraping. Please feel free to read and participate in the discussions with your comments. We also provide various in-depth articles about web scraping tips, techniques and the latest technologies. These include the latest anti-bot technologies and methods used to safely and responsibly gather publicly available data from the Internet.
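This sketch uses a hypothetical `rawData` string. It parses the markup with `html.parser`, walks the resulting tree, and shows why a JavaScript-built list comes back empty: Beautiful Soup never executes the `<script>`. Rendering such content would need a browser-driven tool such as Selenium, which this sketch does not use:

```python
from bs4 import BeautifulSoup

# Hypothetical static HTML: the repository list is filled in later by JavaScript,
# so the raw markup only contains an empty <ul> and a <script> tag.
rawData = """
<html><head><title>Repositories</title></head>
<body>
  <h1>Repositories</h1>
  <ul id="repos"></ul>
  <script>
    const li = document.createElement("li");
    li.className = "repo";
    li.textContent = "demo";
    document.getElementById("repos").appendChild(li);
  </script>
</body></html>
"""

# html.parser is the parser bundled with Python, so no extra install is needed.
soup = BeautifulSoup(rawData, "html.parser")

# The document is now a tree of Python objects (Tag, NavigableString, ...).
page_title = soup.title.string      # 'Repositories'
heading = soup.body.h1.get_text()   # 'Repositories'
top_level = [child.name for child in soup.body.find_all(recursive=False)]

# Beautiful Soup does not run JavaScript, so no <li class="repo"> exists in the tree.
repos = soup.find_all("li", class_="repo")
print(repos)  # []
```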

#Beautiful soup github webscraper full#

We provide many step-by-step tutorials with source code for web scraping, web crawling, data extraction, headless browsers, etc. Our web scraping tutorials are usually written in Python using libraries such as LXML, Beautiful Soup and Selectorlib, and occasionally in Node.js. In most cases the full source code is available to download or can easily be cloned using Git. Learn how to use web scraping to enhance productivity and automation.
